In data mining, much of the time goes into collecting and preprocessing raw data. Quite a few data mining research tasks require downloading data from the Internet, e.g. articles from Wikipedia, photos from Flickr, Google search results, reviews from Amazon, etc.

General-purpose crawlers such as wget are usually not powerful or specialized enough for this kind of download, so we often have to write a crawler ourselves.

I have recently been working on an image-related research project, for which I need to download a lot of tagged images from Flickr. I am aware that there is a Flickr downloadr, which uses the Flickr API to download images. However, 1) it only downloads licensed photos and 2) it cannot download the tags of a photo. So I decided to write one myself.

The input of the crawler is a tag query, e.g. “dog”, the number of search result pages to crawl (which determines how many photos are downloaded), and the disk folder in which to store the downloaded images.

Because the number of photos is large, downloading them in parallel is critical. In .NET there are several ways to do parallel computing; for IO-intensive tasks such as this one, F# asynchronous workflows are a natural fit.
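To make the idea concrete before touching the network, here is a minimal sketch of running several IO-bound workflows in parallel. `simulatedDownload` and the file names are made up for illustration; `Async.Sleep` stands in for waiting on a server.

```fsharp
// Hypothetical stand-in for a download: waits, then returns a fake payload size.
let simulatedDownload (name: string) : Async<string * int> =
    async {
        do! Async.Sleep 200          // pretend to wait for the network
        return name, name.Length     // fake "content length"
    }

let names = [ "dog1.jpg"; "dog2.jpg"; "dog3.jpg"; "dog4.jpg" ]

let results =
    names
    |> List.map simulatedDownload    // build the workflows (nothing runs yet)
    |> Async.Parallel                // combine them into one workflow
    |> Async.RunSynchronously        // start them all and wait for every result

results |> Array.iter (fun (name, size) -> printfn "%s -> %d" name size)
```

Since no thread is blocked while a workflow is waiting, the four simulated downloads should finish in roughly the time of one, and the results come back in the same order as the inputs.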

Tutorials for Async workflow

As asynchronous workflows are one of the key features of F#, there are quite a few tutorials online for F# async programming. Providing one more in this blog would be repetitive.

Luke Hoban’s PDC 2009 talk, F# for Parallel and Asynchronous Programming, is very good for beginners.

Don Syme wrote 3 articles in a series. Highly recommended for experienced F# users!

The Flickr crawler

To write a crawler for a web site like Flickr, we need to 1) design the downloading strategy and 2) analyze the structure of Flickr's pages.

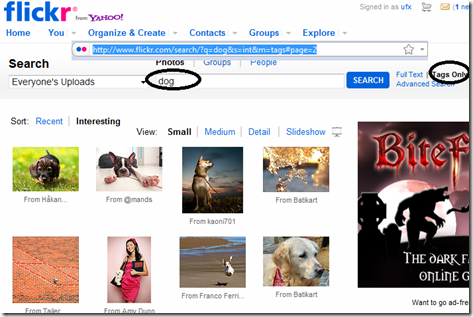

My strategy is to use the search query to search images with some specific tags and from the result page(as shown below), the url of each image is extracted, from which the image and its tags are then crawled.

Figure 1. Flickr search result page.

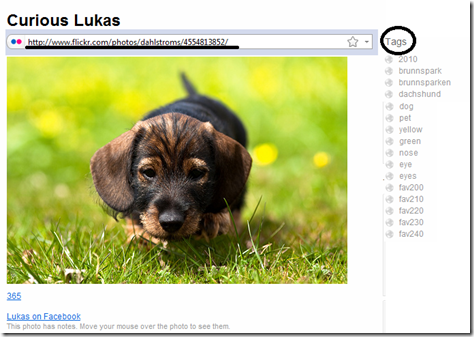

Figure 2. Flickr image page with an image and its tags.

So first we need a function to download a web page asynchronously:

    open System.IO
    open System.Net
    open Microsoft.FSharp.Control.WebExtensions  // AsyncGetResponse

    let fetchUrl (url:string) =
        async {
            try
                let req = WebRequest.Create(url) :?> HttpWebRequest
                // identify as a regular browser
                req.UserAgent <- "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)"
                req.Method <- "GET"
                req.AllowAutoRedirect <- true
                req.MaximumAutomaticRedirections <- 4
                let! response1 = req.AsyncGetResponse()
                let response = response1 :?> HttpWebResponse
                use stream = response.GetResponseStream()
                use streamreader = new System.IO.StreamReader(stream)
                return! streamreader.AsyncReadToEnd() // synchronous alternative: .ReadToEnd()
            with
                | _ -> return "" // if there's any exception, just return an empty string
        }

fetchUrl identifies itself with a browser User-Agent string and follows up to four automatic redirects. The exception handling is deliberately simple: on any failure it just returns an empty string for the web page. Notice that the return type of the function is Async<string>, so it cannot be used to download images, which are binary rather than text.

So the next task is to write a function to download images:

    let getImage (imageUrl:string) =
        async {
            try
                let req = WebRequest.Create(imageUrl) :?> HttpWebRequest
                req.UserAgent <- "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)"
                req.Method <- "GET"
                req.AllowAutoRedirect <- true
                req.MaximumAutomaticRedirections <- 4
                let! response1 = req.AsyncGetResponse()
                let response = response1 :?> HttpWebResponse
                use stream = response.GetResponseStream()
                use ms = new MemoryStream()
                let bytesRead = ref 1
                let buffer = Array.create 0x1000 0uy
                // copy the response into memory, 4 KB at a time, until the stream is exhausted
                while bytesRead.Value > 0 do
                    bytesRead.Value <- stream.Read(buffer, 0, buffer.Length)
                    ms.Write(buffer, 0, bytesRead.Value)
                return ms.ToArray()
            with
                | _ -> return Array.create 0 0uy // if there's any exception, just return an empty image
        }

Next we write the code to get the url of an image and its tags from an image page (see Figure 2):

    // returns the text between the first occurrence of head and the next double quote
    let getBetween (page:string) (head:string) =
        let len = head.Length
        let idx = page.IndexOf(head)
        let idx2 = page.IndexOf('"', idx+len)
        let between = page.Substring(idx+len, idx2 - idx - len)
        between

    let getImageUrlAndTags (page:string) =
        // the image url is the first <img src="..."> after the photoImgDiv marker
        let header = "class=\"photoImgDiv\">"
        let idx = page.IndexOf(header)
        let url = getBetween (page.Substring(idx)) "<img src=\""
        // the tags are the comma-separated content of the keywords meta tag
        let header2 = "<meta name=\"keywords\" content=\""
        let tagStr = getBetween page header2
        let s = tagStr.Split([|','|], System.StringSplitOptions.RemoveEmptyEntries)
        let tags = s |> Array.map (fun t -> t.Trim())
        url, tags
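As a quick sanity check, the string extraction can be tried on a small hand-written fragment. The HTML below is made up for illustration and is not real Flickr markup; `getBetween` is repeated so the snippet runs on its own.

```fsharp
// Repeated from the post so that this snippet is self-contained.
let getBetween (page: string) (head: string) =
    let len = head.Length
    let idx = page.IndexOf(head)
    let idx2 = page.IndexOf('"', idx + len)
    page.Substring(idx + len, idx2 - idx - len)

// Made-up page fragment (an assumption, only for illustration).
let samplePage =
    "<meta name=\"keywords\" content=\"sheep, farm, wool\">"
    + "<div class=\"photoImgDiv\"><img src=\"http://example.com/123_abc.jpg\" alt=\"\"></div>"

// Extract the raw keyword string, then split and trim it into tags.
let tagStr = getBetween samplePage "<meta name=\"keywords\" content=\""

let tags =
    tagStr.Split([| ',' |], System.StringSplitOptions.RemoveEmptyEntries)
    |> Array.map (fun t -> t.Trim())

// Extract the image url from the first <img src="...">.
let url = getBetween samplePage "<img src=\""

printfn "url = %s, tags = %A" url tags
```

Note that `getBetween` does not check whether the marker was found: when `IndexOf` returns -1 the result is meaningless or an exception is thrown, so a more defensive version would test for that first.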

Finally, we write a function that walks through every search result page, parses it, and downloads the images listed on it:

    let getImagesWithTag (tag:string) (pages:int) =
        let rooturl = @"http://www.flickr.com/search/?q="+tag+"&m=tags&s=int"
        seq {
            for i=1 to pages do
                let url = rooturl + "&page=" + i.ToString()
                printfn "url = %s" url
                let page = fetchUrl url |> Async.RunSynchronously
                // getImageUrls (not listed in this post) extracts the image page urls from a search result page
                let imageUrls = getImageUrls page
                // file name = last segment of the image url
                let getName (iurl:string) =
                    let s = iurl.Split '/'
                    s.[s.Length-1]
                (* images in every search page *)
                let images =
                    imageUrls
                    |> Seq.map (fun url -> fetchUrl url)
                    |> Async.Parallel
                    |> Async.RunSynchronously
                    |> Seq.map (fun page ->
                        async {
                            let iurl, tags = getImageUrlAndTags page
                            let! icontent = getImage iurl // awaited without blocking a thread
                            let iname = getName iurl
                            return iname, icontent, tags
                        })
                    |> Async.Parallel
                    |> Async.RunSynchronously
                yield! images
        }
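The listing above calls `getImageUrls`, which is not shown in this post. Purely as an illustration, here is one way such a function could look. It is a hypothetical sketch that assumes the search result page links to photo pages with relative urls of the form `/photos/<user>/<id>/`; the actual markup may differ, and the sample HTML is made up.

```fsharp
open System.Text.RegularExpressions

// HYPOTHETICAL sketch: assumes photo links look like href="/photos/<user>/<id>/".
let getImageUrls (page: string) : string[] =
    Regex.Matches(page, "href=\"(/photos/[^/\"]+/\\d+/)\"")
    |> Seq.cast<Match>
    |> Seq.map (fun m -> "http://www.flickr.com" + m.Groups.[1].Value)
    |> Seq.distinct      // a photo is often linked more than once per page
    |> Seq.toArray

// Made-up fragment of a result page, only for trying the function out.
let sampleResultPage =
    "<a href=\"/photos/alice/12345/\">thumbnail</a>"
    + "<a href=\"/photos/alice/12345/\">title</a>"
    + "<a href=\"/photos/bob/678/\">thumbnail</a>"
    + "<a href=\"/explore/\">explore</a>"

let imageUrls = getImageUrls sampleResultPage
imageUrls |> Array.iter (printfn "%s")
```

A regular expression is used here instead of `getBetween` because a result page contains many links rather than a single marker.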

A driver function then writes all the images and their tags to the hard disk:

    let downloadImagesWithTag (tag:string) (pages:int) (folder:string) =
        let images = getImagesWithTag tag pages
        images
        |> Seq.iter (fun (name, content, tags) ->
            // Path.Combine works whether or not folder ends with a path separator
            let fname = Path.Combine(folder, name)
            File.WriteAllBytes(fname, content)
            File.WriteAllLines(fname + ".tag", tags)
            )

We’re done! A Flickr image crawler in only about 120 lines of code. Let’s download some images!

    downloadImagesWithTag "sheep" 5 @"D:\WORK\ImageData\flickr\sheep\"

It takes less than 5 minutes to download 300 sheep pictures from Flickr.

Discussions

1. One of the strengths of F# async programming is how easy exception handling is. In this example the exception is handled immediately, where it occurs; we could also let it propagate to a higher-level function and handle it there. However, that would require more thinking….

2. I only download the images within one search result page in parallel; the result pages themselves are processed sequentially. The program could be modified to process multiple result pages in parallel as well, which gives a hierarchical parallel program: 1) at the first level, multiple search result pages are processed in parallel, and 2) at the second level, the images in each search result page are downloaded in parallel.
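Both points can be sketched together without touching the network. In the sketch below, `fetchImage`, `fetchPage` and `crawl` are hypothetical stand-ins (not the functions from this post); downloads are simulated with `Async.Sleep`, and one of them fails on purpose. Exceptions are not swallowed where they occur: `Async.Catch` passes them up as values, so the top level decides what to do with them.

```fsharp
// Hypothetical stand-in for downloading one image; one download fails on purpose.
let fetchImage (page: int) (index: int) : Async<string> =
    async {
        do! Async.Sleep 50
        if page = 2 && index = 1 then failwith "simulated download failure"
        return sprintf "page%d-image%d" page index
    }

// Second level: all images of one result page in parallel.
// Async.Catch turns an exception into a Choice value instead of raising it.
let fetchPage (page: int) : Async<Choice<string, exn>[]> =
    [ 0 .. 2 ]
    |> List.map (fun i -> fetchImage page i |> Async.Catch)
    |> Async.Parallel

// First level: all result pages in parallel.
let crawl (pages: int) : Choice<string, exn>[] =
    [ 1 .. pages ]
    |> List.map fetchPage
    |> Async.Parallel
    |> Async.RunSynchronously
    |> Array.concat

let outcomes = crawl 3

// The errors are handled here, at the top level.
let succeeded =
    outcomes |> Array.choose (function Choice1Of2 name -> Some name | _ -> None)
let failed =
    outcomes |> Array.choose (function Choice2Of2 e -> Some e.Message | _ -> None)

printfn "%d succeeded, %d failed" succeeded.Length failed.Length
```

With real downloads, two levels of parallelism can issue a large number of simultaneous requests, so some form of throttling would probably be needed in practice.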